High Performance Computing & IT

Theoretical, Modeling and Computational Plasma Physics

The Institute has a vibrant programme of theoretical analysis and computer simulation of plasma systems, covering both basic research and plasma applications. Continuing its long-standing focus on high-performance computing facilities, IPR has now set up a 1 Petaflop HPC facility.

IPR has established a High Performance Computing (HPC) facility with a theoretical peak performance of 1 Petaflop (PF). This HPC system, named ANTYA (meaning 10¹⁵ in Sanskrit), has more than 10,000 cores and is capable of a peak of 10¹⁵ floating-point operations per second (FLOPS). The installation, testing, and commissioning of ANTYA were completed successfully in July 2019. ANTYA is used by the Institute's R&D community, which exploits the power of parallel computing to solve complex problems. Extensive scaling studies of open-source codes (LAMMPS and PLUTO) have been completed using the full machine, and scaling up to 10,000 cores has been demonstrated for these highly scalable codes. In-house codes, as well as collaboration-based codes, have been successfully installed to run in a distributed environment on ANTYA. Heavily used commercial engineering applications have also been ported successfully to ANTYA.
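A machine's theoretical peak (often written Rpeak) is usually obtained by multiplying the number of cores by the clock rate and by the number of floating-point operations each core can retire per cycle. The minimal sketch below shows that arithmetic for a CPU-only cluster. The clock rate and FLOPs-per-cycle values are hypothetical placeholders chosen for illustration, not ANTYA's actual hardware specifications, and the source does not say how ANTYA's 1 PF is split between CPU and GPU nodes.

```python
# Theoretical peak (Rpeak) of a CPU-only cluster: a minimal sketch.
# All hardware parameters used below are HYPOTHETICAL placeholders, not ANTYA's specs.

PETAFLOP = 1e15  # 1 PF = 10^15 floating-point operations per second


def theoretical_peak_flops(cores: int, clock_hz: float, flops_per_cycle: int) -> float:
    """Return Rpeak in FLOP/s = cores x clock rate x FLOPs per core per cycle."""
    return cores * clock_hz * flops_per_cycle


def required_per_core_flops(target_flops: float, cores: int) -> float:
    """Average FLOP/s each core must deliver for the machine to reach target_flops."""
    return target_flops / cores


if __name__ == "__main__":
    # Hypothetical core: 2.5 GHz, 32 double-precision FLOPs/cycle
    # (e.g. two 512-bit FMA units: 2 units x 8 doubles x 2 ops per FMA).
    rpeak = theoretical_peak_flops(cores=10_000, clock_hz=2.5e9, flops_per_cycle=32)
    print(f"Rpeak = {rpeak / PETAFLOP:.2f} PF")
    per_core = required_per_core_flops(PETAFLOP, 10_000)
    print(f"Per-core average needed for 1 PF on 10,000 cores: {per_core / 1e9:.0f} GFLOP/s")
```

With these assumed values the result is 0.80 PF, and reaching a full 10¹⁵ FLOPS on 10,000 cores would require an average of 100 GFLOP/s per core; substituting the real per-node specifications (and any accelerator contribution) gives the actual figure.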

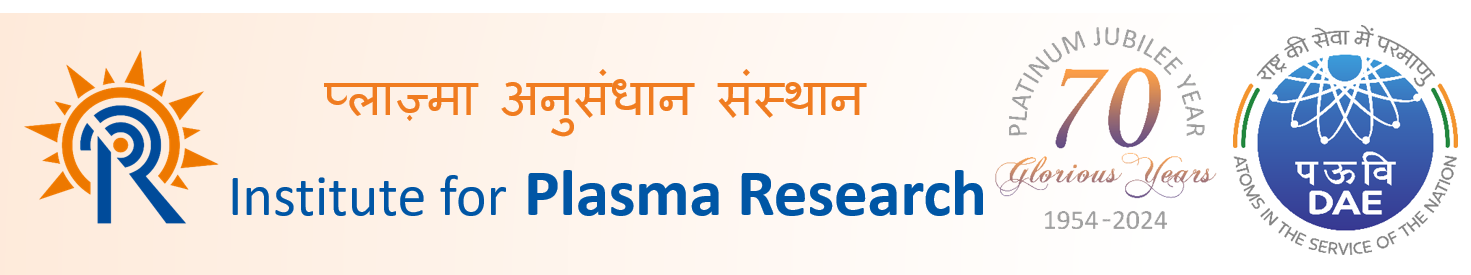

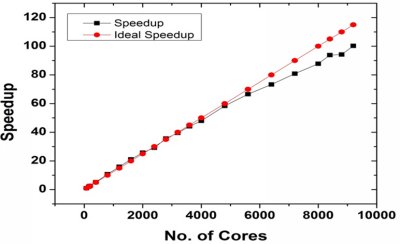

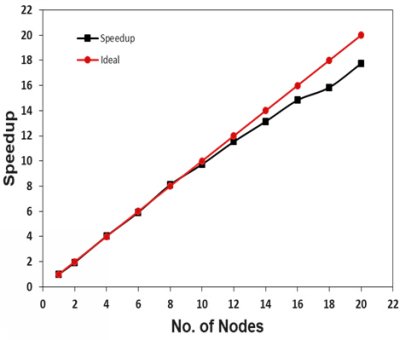

Scaling Studies of Codes Performed Separately on ANTYA CPU and GPU Nodes: LAMMPS (Large-scale Atomic/Molecular Massively Parallel Simulator) and PLUTO are open-source codes released under the GNU General Public License (GPL), used by IPR researchers for Molecular Dynamics (MD) and computational astrophysics simulations, respectively. On ANTYA, LAMMPS has been installed in both an MPI version (LAMMPS CPU) and a GPU version (LAMMPS GPU). Figure A.5.1.1a shows the LAMMPS scaling study performed on ANTYA for a crystal equilibration with about 2 billion atoms using a Lennard-Jones type potential. For the GPU-only LAMMPS scaling study shown in Figure A.5.1.1b, 10.5 million atoms were selected based on the GPU memory limit. For the PLUTO scaling study, a relativistic magnetohydrodynamics (RMHD) blast problem was considered, in which the average Mach number is estimated using the RMHD equations. Two sets of 3-D calculations were performed, on 512 and 812 grid sizes. Figure A.5.1.1c compares the speedup of these two problems with the ideal speedup: the speedup obtained for the 812 grid size is better than for the 512 grid size. A total of 230 nodes were used for the scaling runs. Several other open-source codes, such as OpenFOAM, NAMD, Darknet-YOLO and Bout++, along with data analysis and visualization applications such as VisIt and ParaView, have also been installed and tested on ANTYA. The table lists the codes (indigenous/scientific and commercial) that have been ported to ANTYA and are in regular use.
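Plots of "actual speedup vs. ideal speedup" for a fixed problem size (such as the 2-billion-atom LAMMPS run or the fixed PLUTO grids) are strong-scaling plots. One common way to compute them, sketched below, takes the smallest core count as the baseline: actual speedup is the baseline wall time divided by the wall time at p cores, ideal speedup is the ratio of core counts, and parallel efficiency is their quotient. The timings in the example are hypothetical placeholders, not ANTYA measurements, and the source does not state which baseline its figures use.

```python
# Strong-scaling analysis: speedup and parallel efficiency relative to a baseline run.
# The timings in the usage example are HYPOTHETICAL, not measured ANTYA results.


def strong_scaling(timings: dict[int, float]) -> list[tuple[int, float, float, float]]:
    """For each core count p, return (p, ideal_speedup, actual_speedup, efficiency).

    The baseline is the smallest core count p0, with wall time T0:
      actual speedup  S(p) = T0 / T(p)
      ideal speedup   I(p) = p / p0
      efficiency      E(p) = S(p) / I(p)
    """
    p0 = min(timings)
    t0 = timings[p0]
    rows = []
    for p in sorted(timings):
        actual = t0 / timings[p]
        ideal = p / p0
        rows.append((p, ideal, actual, actual / ideal))
    return rows


if __name__ == "__main__":
    # Hypothetical wall-clock times (seconds) for one fixed-size problem.
    timings = {40: 1000.0, 80: 510.0, 160: 265.0, 320: 140.0}
    for p, ideal, actual, eff in strong_scaling(timings):
        print(f"{p:5d} cores  ideal {ideal:5.1f}x  actual {actual:5.2f}x  efficiency {eff:6.1%}")
```

An efficiency close to 100% means the actual curve tracks the ideal line; the gap that typically opens at high core counts reflects communication and load-imbalance overheads.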

Figure A.5.1.1a Actual Speedup obtained vs. Ideal Speedup for LAMMPS CPU on ANTYA CPU nodes for more than 2 billion atoms

Figure A.5.1.1b Actual Speedup obtained vs. Ideal Speedup for LAMMPS GPU on ANTYA GPU nodes for 10.5 million atoms.


Figure A.5.1.1c: Speedup comparison of 512/812 grid size problems with the ideal speedup for PLUTO
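The better speedup reported for the larger PLUTO grid is consistent with a general surface-to-volume effect in domain-decomposed grid codes: each MPI rank updates the cells inside its subdomain (a volume) but exchanges only the halo cells on its faces (a surface), so larger subdomains spend proportionally less time communicating. The sketch below is a simplified model under stated assumptions (a cubic N³ grid, ranks arranged as a cube, one ghost layer on each of the six faces), not an analysis of PLUTO's actual decomposition; treating 512 and 812 as cells per side is purely illustrative.

```python
# Simplified surface-to-volume model for a 3-D domain decomposition.
# ASSUMPTIONS (not from the source): cubic N^3 global grid, `ranks` arranged
# as a k x k x k cube, and a halo of width `ghost` on each of the 6 faces.


def comm_to_comp_ratio(n_global: int, ranks: int, ghost: int = 1) -> float:
    """Halo cells exchanged per rank divided by interior cells updated per rank."""
    k = round(ranks ** (1 / 3))
    if k ** 3 != ranks:
        raise ValueError("this sketch assumes ranks is a perfect cube")
    n_local = n_global / k                # cells per side of each subdomain
    halo = 6 * ghost * n_local ** 2       # face cells exchanged with neighbours
    interior = n_local ** 3               # cells updated locally
    return halo / interior                # simplifies to 6 * ghost / n_local


if __name__ == "__main__":
    for n in (512, 812):
        print(f"N = {n}: comm/comp ratio on 512 ranks = {comm_to_comp_ratio(n, 512):.4f}")
```

On 512 ranks this model gives a ratio of 0.0938 for N = 512 and about 0.059 for N = 812, so each rank in the larger problem does more computation per halo cell exchanged, which favours better strong scaling.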


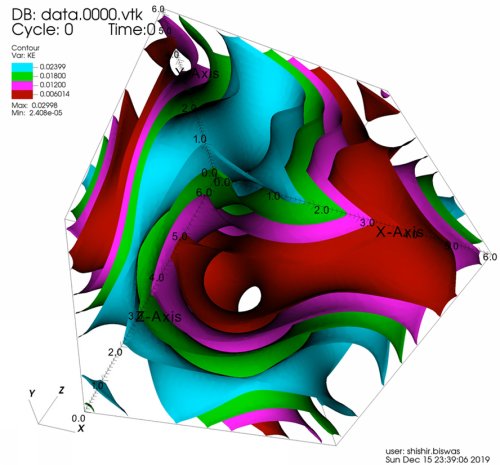

Figure A.5.1.2a: Recurrent and Non-Recurrent Flow Simulation with PLUTO Code


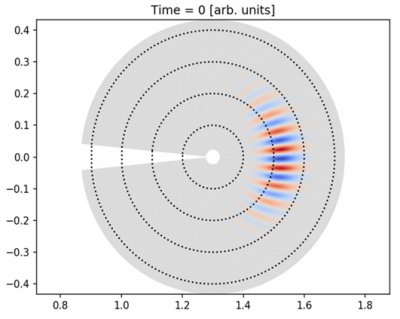

Figure A.5.1.2b Effect of Flow-Shear on ITG Instability in a Tokamak using Bout++


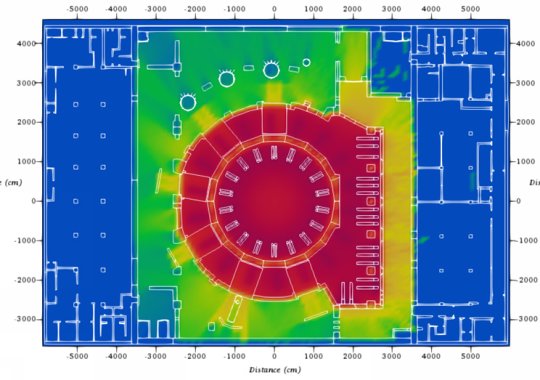

Figure A.5.1.2c Biological Dose Rate Map in Tokamak Complex (Modeling of highly complex radiation sources in ITER environment is performed under Task Agreement C74TD22FI between ITER and IN-DA)


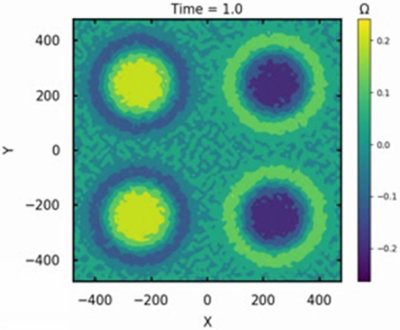

Figure A.5.1.2d Large-Scale MD Simulation with 1 Million Particles using Upgraded Multi-GPU MPMD code


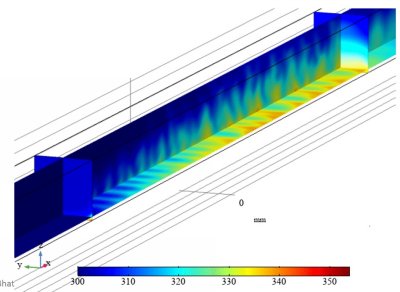

Figure A.5.1.2e Magnetohydrodynamic Flow in Liquid Breeder Blankets Using COMSOL


- High-Performance Computing (HPC, 1 Petaflop) System

- "GANANAM" HPC Newsletter